vLLM RBLN: A vLLM Plugin for RBLN NPU

vLLM RBLN (vllm-rbln) is a hardware plugin for the vLLM library that delivers high-performance large language model inference and serving on RBLN NPUs.

How to install

Before installing vLLM RBLN, ensure that you have installed the latest versions of the required dependencies, including rebel-compiler and optimum-rbln.

You can install vLLM RBLN either by installing it directly from PyPI or by building it from source.

Install using PyPI

To install the latest release from PyPI, install the vllm-rbln package with pip.

Install from source

1. Clone the vllm and vllm-rbln repositories

Note that the latest vllm version is not necessarily the version that vllm-rbln requires.

2. Install vllm

Setting VLLM_TARGET_DEVICE=empty allows you to build vLLM without specifying a target device during installation.
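As a rough sketch, the same setting can be applied when the install is driven from Python. The checkout path `./vllm`, the helper name `vllm_install_invocation`, and the use of an editable `pip install -e` are assumptions made for illustration; the repository's own instructions remain the reference for the exact command.

```python
import os
import sys


def vllm_install_invocation(vllm_checkout="./vllm"):
    """Return (command, env) for installing vLLM from a local checkout.

    The checkout path and the editable install are illustrative assumptions.
    """
    env = dict(os.environ)
    # Build vLLM without binding it to a specific target device.
    env["VLLM_TARGET_DEVICE"] = "empty"
    command = [sys.executable, "-m", "pip", "install", "-e", vllm_checkout]
    return command, env


command, env = vllm_install_invocation()
print(" ".join(command))
print("VLLM_TARGET_DEVICE =", env["VLLM_TARGET_DEVICE"])
```

To actually run the install, the pair can be passed to `subprocess.run(command, env=env, check=True)`. Copying `os.environ` keeps the variable scoped to the install subprocess rather than changing the current process.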

3. Install vllm-rbln

For details on the latest version and changes, refer to the Release Notes.

Note

As of version v0.8.1, vllm-rbln has migrated to the new plugin system. From this version onward, installing vllm-rbln will automatically pull in vllm as a dependency.

In earlier versions (e.g., v0.8.0), the vllm-rbln package does not depend on the vllm package, and installing both may cause conflicts or runtime issues. If you installed vllm after vllm-rbln, reinstall vllm-rbln to ensure proper functionality.

Tutorials & Features

The following resources help you get started with vLLM RBLN and show its capabilities and deployment options:

- Model Tutorials: Step-by-step tutorials demonstrating how to run vLLM RBLN with representative models

- Feature Descriptions: Explanations of key vLLM RBLN capabilities, covering various execution modes and server functionalities

Design Overview

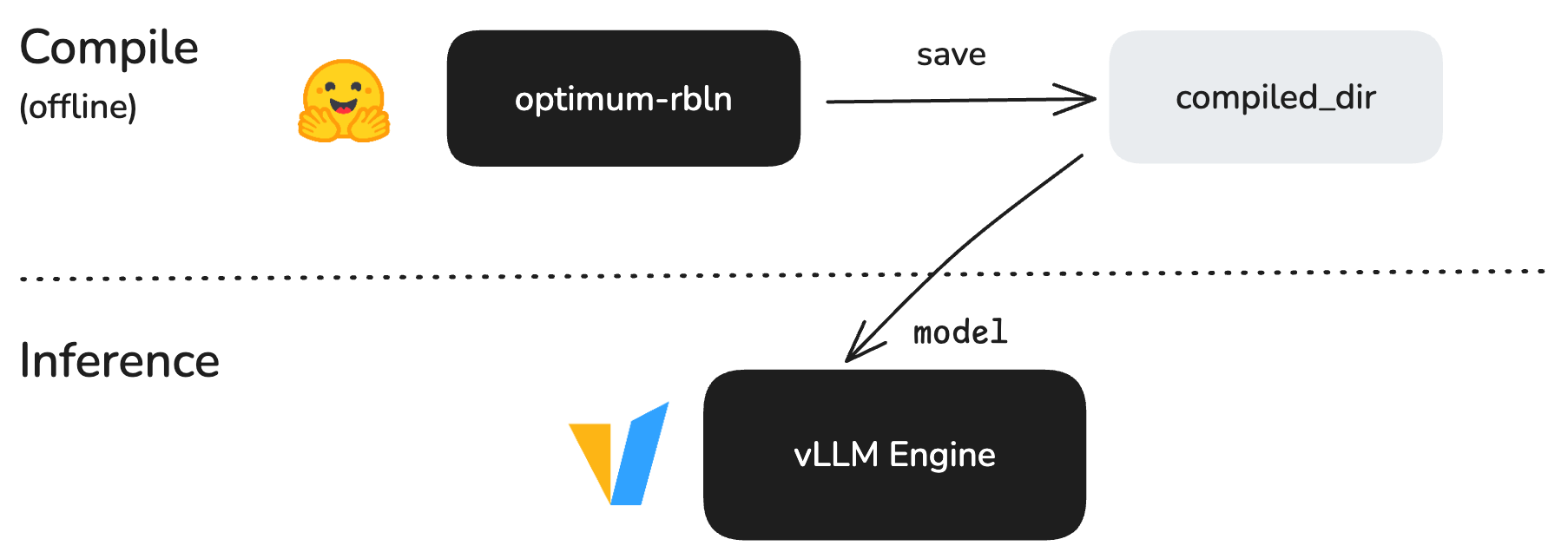

The initial design of vLLM RBLN integrates with optimum-rbln. In this setup, models are first compiled using optimum-rbln, and the resulting compiled model directory is then used by vLLM via the model parameter. This remains the default implementation for now, offering a stable and proven workflow while a new architecture is under active development.

All tutorials are currently based on this design.
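The two-stage workflow described above can be sketched roughly as follows. This is a minimal illustration, not the tutorials' exact code: the function names `compile_model` and `generate` are hypothetical, the model ID and directory passed in are placeholders chosen by the caller, and the engine may need additional arguments (for example, sequence length or batch settings matching the values used at compile time) that are omitted here.

```python
def compile_model(model_id, compiled_dir):
    """Stage 1: compile a model with optimum-rbln and save the result."""
    # Imported lazily so this file can be loaded without the RBLN SDK.
    from optimum.rbln import RBLNLlamaForCausalLM

    # export=True asks optimum-rbln to compile the model for the NPU.
    model = RBLNLlamaForCausalLM.from_pretrained(model_id, export=True)
    model.save_pretrained(compiled_dir)
    return compiled_dir


def generate(compiled_dir, prompts):
    """Stage 2: point vLLM's model parameter at the compiled directory."""
    from vllm import LLM, SamplingParams

    llm = LLM(model=compiled_dir)
    outputs = llm.generate(prompts, SamplingParams(max_tokens=64))
    return [output.outputs[0].text for output in outputs]
```

Keeping the two stages in separate functions mirrors the design: compilation happens once, and the saved directory can then be reused by any number of vLLM runs.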

Migration to Torch Compile-Based Integration

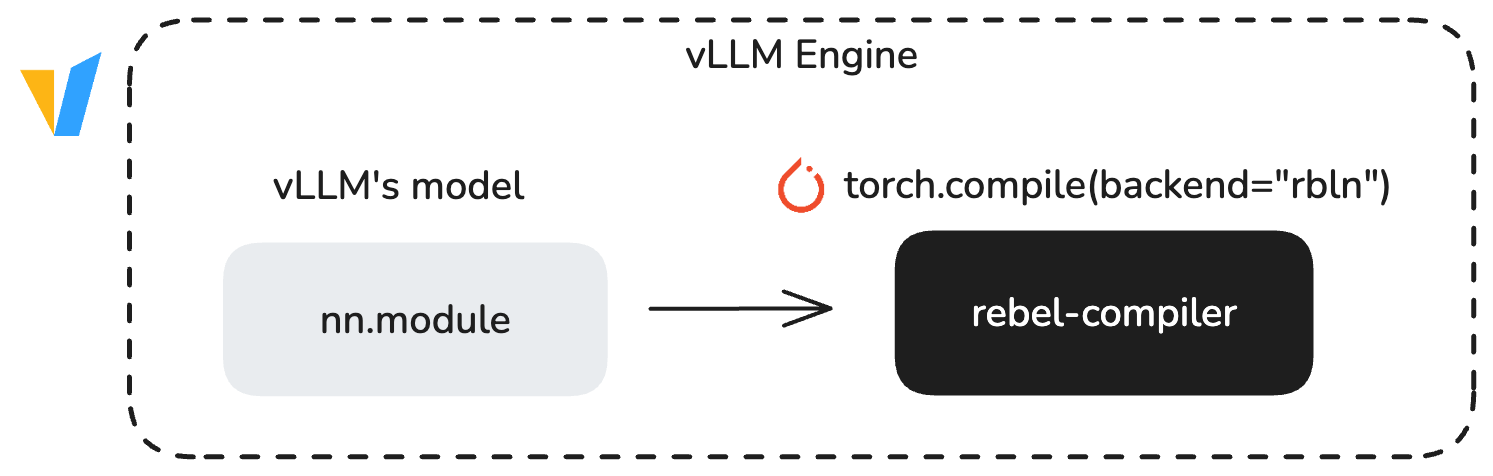

We are actively migrating toward a new architecture that leverages torch.compile() and natively integrates with vLLM's APIs and model zoo. This new design eliminates the need for a separate compilation step, offering a more seamless and intuitive user experience through standard vLLM workflows.

With torch.compile(), the first run is a cold start, during which the model is compiled. The compiled result is then cached, so subsequent runs are warm starts: they skip compilation, start faster, and reuse the optimized compiled artifacts.
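The cold-start and warm-start behavior can be pictured with a toy on-disk cache. This is only a conceptual model under stated assumptions: `ArtifactCache` and `fake_compile` are hypothetical names, and the sketch does not reflect how torch.compile() or vllm-rbln actually store or key their compiled artifacts.

```python
import hashlib
import pickle
import tempfile
from pathlib import Path


class ArtifactCache:
    """Toy on-disk cache keyed by a hash of the model configuration."""

    def __init__(self, cache_dir):
        self.cache_dir = Path(cache_dir)
        self.cache_dir.mkdir(parents=True, exist_ok=True)

    def _path_for(self, config):
        key = hashlib.sha256(repr(sorted(config.items())).encode()).hexdigest()
        return self.cache_dir / f"{key}.pkl"

    def load_or_compile(self, config, compile_fn):
        """Return (artifact, start_kind); start_kind is 'cold' or 'warm'."""
        path = self._path_for(config)
        if path.exists():
            # Warm start: reuse the artifact produced by an earlier run.
            return pickle.loads(path.read_bytes()), "warm"
        # Cold start: pay the compilation cost once, then persist the result.
        artifact = compile_fn(config)
        path.write_bytes(pickle.dumps(artifact))
        return artifact, "cold"


def fake_compile(config):
    # Stand-in for an expensive compilation step.
    return {"compiled_for": dict(config)}


cache = ArtifactCache(tempfile.mkdtemp())
config = {"model": "example-model", "max_seq_len": 4096}
_, first = cache.load_or_compile(config, fake_compile)
_, second = cache.load_or_compile(config, fake_compile)
print(first, second)  # cold warm
```

Because the cache key is derived from the configuration, changing any setting in this toy model produces a new key and therefore a new cold start.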

V1 Engine Support

vLLM RBLN supports the vLLM V1 engine and uses it by default. To fall back to V0, set the environment variable VLLM_USE_V1=0. Note that pooling models are only available in V1.
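When vLLM is launched from a Python script rather than a shell, the variable can be set in-process. The sketch below assumes that setting it before importing vLLM is the safe ordering, so that engine selection sees the value.

```python
import os

# Select the V0 engine. Set this before vLLM is imported so that
# engine selection sees the value.
os.environ["VLLM_USE_V1"] = "0"

print(os.environ["VLLM_USE_V1"])  # 0
```

Leaving the variable unset keeps the default V1 engine.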

Supported Models

The following tables list the models currently supported by vLLM RBLN.

Decoder-only Models

| Architecture | Example Model Code |
|---|---|
| RBLNLlamaForCausalLM | Llama-2/3 |
| RBLNGemmaForCausalLM | Gemma |
| RBLNGemma2ForCausalLM | Gemma2 |
| RBLNPhiForCausalLM | Phi-2 |
| RBLNOPTForCausalLM | OPT |
| RBLNGPT2LMHeadModel | GPT2 |
| RBLNMistralForCausalLM | Mistral |
| RBLNExaoneForCausalLM | EXAONE-3/3.5 |
| RBLNQwen2ForCausalLM | Qwen2/2.5 |
| RBLNQwen3ForCausalLM | Qwen3 |

Encoder-Decoder Models

| Architecture | Example Model Code |
|---|---|
| RBLNT5ForConditionalGeneration | T5 |
| RBLNBartForConditionalGeneration | BART |
| RBLNWhisperForConditionalGeneration | Whisper |

Upcoming Change

In a future release with vLLM v0.11.x, V0 support will be deprecated.

As a result, Whisper will be the only supported encoder–decoder model, and support for all other encoder–decoder models will be removed.

For more information, see the vLLM V1 User Guide.

Multimodal Language Models

| Architecture | Example Model Code |
|---|---|
| RBLNLlavaNextForConditionalGeneration | LLaVA-NeXT |
| RBLNQwen2VLForConditionalGeneration | Qwen2-VL |
| RBLNQwen2_5_VLForConditionalGeneration | Qwen2.5-VL |
| RBLNIdefics3ForConditionalGeneration | Idefics3 |
| RBLNGemma3ForConditionalGeneration | Gemma3 |
| RBLNLlavaForConditionalGeneration | LLaVA |
| RBLNBlip2ForConditionalGeneration | BLIP2 |
| RBLNPaliGemmaForConditionalGeneration | PaliGemma |
| RBLNPaliGemmaForConditionalGeneration | PaliGemma2 |

Pooling Models

| Architecture | Example Model Code |
|---|---|
| RBLNT5EncoderModel | T5Encoder-based |
| RBLNBertModel | BERT-based |
| RBLNRobertaModel | RoBERTa-based |
| RBLNXLMRobertaModel | XLM-RoBERTa-based |
| RBLNXLMRobertaForSequenceClassification | XLM-RoBERTa-based |
| RBLNRobertaForSequenceClassification | RoBERTa-based |
| RBLNQwen3ForCausalLM | Qwen3-based |
| RBLNQwen3Model | Qwen3-based |