Custom Kernel¶

Overview¶

vLLM RBLN supports custom kernels to enable fine-grained performance optimization on RBLN NPUs. At present, Triton is the supported interface for authoring custom kernels on RBLN.

Installation¶

No separate installation step is required for Triton. Triton support is integrated into the RBLN compiler (rebel-compiler), and installing the RBLN compiler toolchain is sufficient to build custom kernels.

For installation instructions, see the Installation guide.

Compilation Flow¶

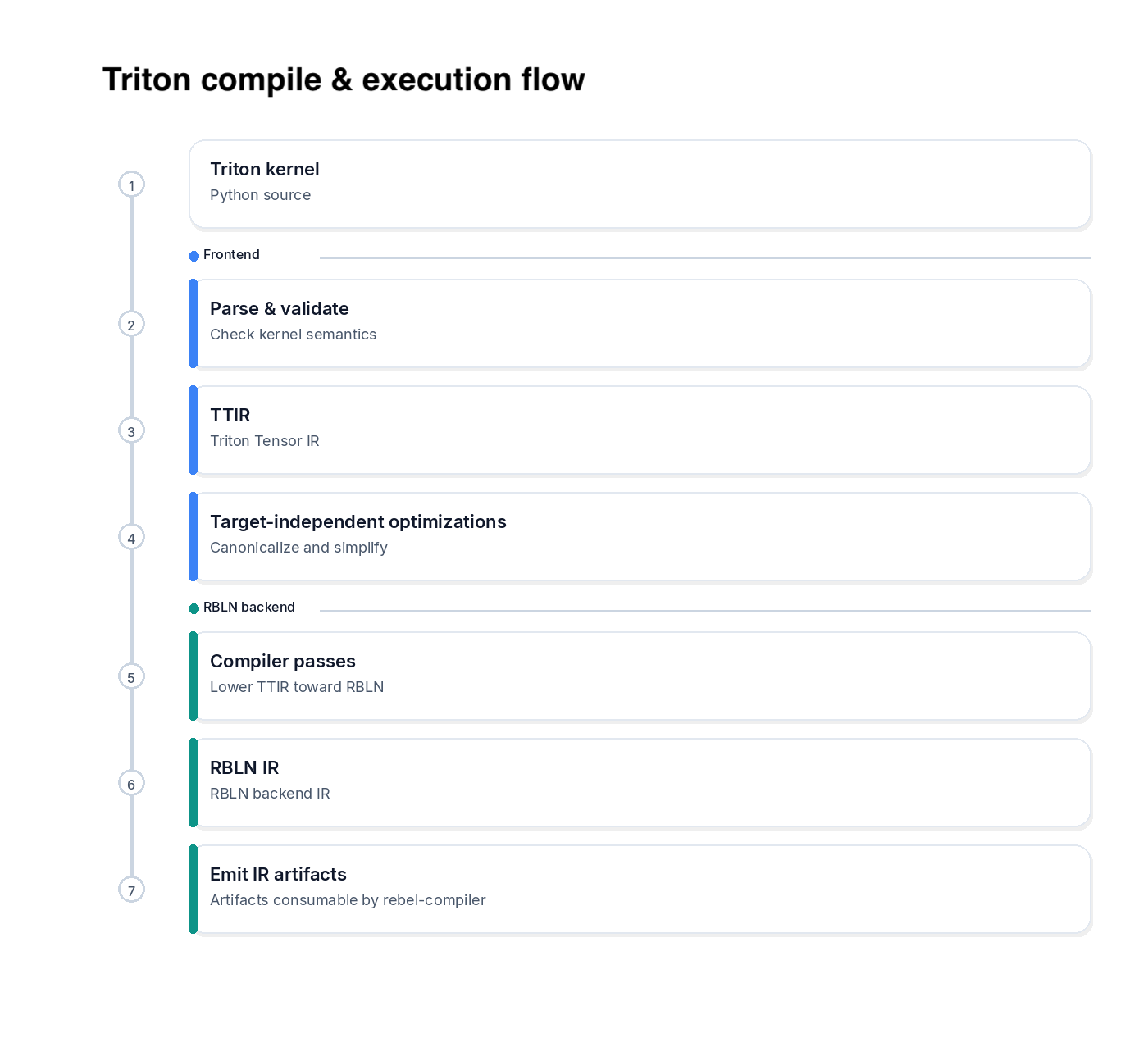

When you write and run a custom kernel, Triton compiles a custom kernel into RBLN IR artifacts (intermediate files consumed by the RBLN compiler). The RBLN compiler then compiles those artifacts into a target binary and runs it on the device.

The compilation pipeline is split into:

- A frontend that performs target-independent analysis and optimization.

- An RBLN backend that lowers Triton IR to RBLN-specific forms and emits artifacts consumable by

rebel-compiler.

The compilation flow is:

-

Frontend (target-independent)

- Parse the custom kernel and validate its semantics (shapes, pointer usage, and constraints required by the compiler).

- Lower the custom kernel into Triton Tensor IR (TTIR), a target-independent intermediate representation used for further compilation.

- Apply target-independent optimizations (e.g., canonicalization and simplification) before target-specific lowering.

-

RBLN backend (target-specific)

- Run RBLN-specific compiler passes to make the program executable on RBLN NPU (target-specific lowering).

- Convert TTIR into RBLN IR, the backend IR consumed by the RBLN Compiler.

- Apply hardware-specific optimizations (e.g., layout and scheduling decisions) to match RBLN constraints.

- Emit IR artifacts (files/objects) that the toolchain can compile into a target binary and execute.

Basic Custom Kernel Writing Guide¶

This section demonstrates usage with flash_attention_naive_prefill as an example, which is used in vLLM RBLN. The related code can be found in flash attention.

How to use a custom kernel¶

1. Defining a Custom Kernel with Triton¶

Kernels are defined with @triton.jit. Kernel behavior should be implemented inside the function.

2. Custom Kernel Compilation (warmup)¶

Kernels written in Triton are lowered by the Triton compiler into RBLN IR, which is consumed by rebel-compiler. To trigger this compilation step and generate the required IR artifacts, run the Triton-provided warmup function.

3. PyTorch Operator Registration (triton_op, register_fake)¶

Register the Triton kernel as a PyTorch custom operator so it can be invoked via torch.ops.rbln_triton_ops.

4. Operator Invocation (torch.ops.rbln_triton_ops)¶

At runtime, invoke the registered custom kernel via torch.ops.rbln_triton_ops.<user_kernel_name>. In this example, the operator name is flash_attention_naive_prefill.

How to write custom kernels¶

Guidelines for writing custom kernels¶

-

Use

tl.static_rangefor loops- Loops operate with a fixed number of iterations. Use

tl.static_range(...)andtl.constexprparameters. - Dynamic loops using

tl.rangeare currently not supported.

- Loops operate with a fixed number of iterations. Use

-

Set

keep_dims=Truefor reductions (tl.max,tl.sum)- Reduction results in attention kernels are commonly broadcast back to the original tensor rank.

- Use

keep_dims=Trueto preserve dimensions and make broadcasting explicit.

-

In this example, the warmup

gridis compile-time only- Warmup is used solely for compilation and IR artifact generation; runtime execution is handled by the RBLN toolchain.

- Use a minimal placeholder such as

grid=(1,).

Supported Ops¶

For the list of currently supported operations, see Supported Ops. The supported operator set will expand over time.

In addition to standard Triton operations, RBLN also provides specialized operations tailored to RBLN NPU hardware. For details, see Specialized Operations.

vLLM RBLN Custom Kernel Examples¶

Currently, vLLM RBLN supports the following custom kernels.